Governor’s Office issues initial generative artificial intelligence report on Tuesday

SACRAMENTO, Calif. – On Tuesday, the Governor's Office released a report about the emerging possibilities and potential pitfalls of generative artificial intelligence.

The report, which focuses on the benefits and risks of the technology, is the first in a series of reports from California's state government required by Executive Order N-12-23, issued on Sept. 6 of this year.

“California can pioneer thoughtful and innovative approaches to GenAI adoption in state government,” said Secretary Amy Tong, leader of the multi-agency team that delivered the report. “Through careful use and well-designed trials, we will learn how to deploy this technology effectively to make the work of government employees easier and improve services we provide to the people of California.”

California plays an outsized role in artificial intelligence research.

UC Berkeley's College of Computing, Data Science, and Society; Stanford University's Institute for Human-Centered Artificial Intelligence; and UC Santa Barbara's Center for Responsible Machine Learning are all at the forefront of academic research into artificial intelligence. In addition, 35 of the companies on Forbes' AI 50 list are headquartered in California.

Although this is only the first report on the topic, it already sets out four interim principles for the state's use of generative artificial intelligence, or GenAI:

- To protect the safety and privacy of Californians’ data, and consistent with state policy, state employees should only use state-provided, enterprise GenAI tools on State-approved equipment for their work

- Under no circumstances should state employees provide state data or the data of California residents to a free, publicly available GenAI solution like ChatGPT or Google Bard, or use these unapproved GenAI applications or services on a State computing device

- It is important to provide a plain-language explanation of how GenAI systems factor into delivering a state service and disclose when content is generated by GenAI

- State supervisors and employees should also review GenAI products for accuracy and make sure to paraphrase rather than use AI-generated text, audio, or images verbatim

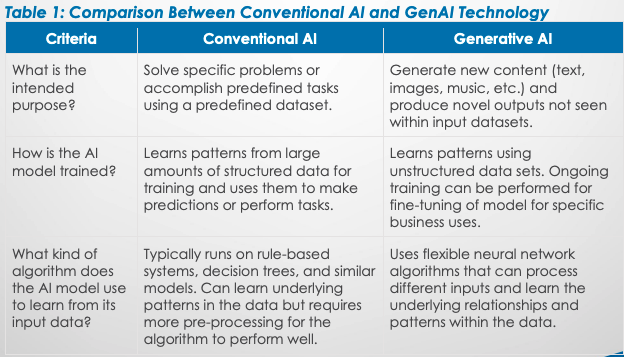

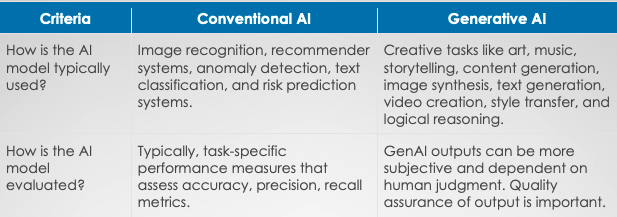

An early section of the report explains the difference between emerging generative artificial intelligence and conventional artificial intelligence.

While generative artificial intelligence is currently largely confined to standalone applications such as ChatGPT or DALL-E, conventional artificial intelligence is already built into many established tools, such as robotic process automation, fraud detection tools, image classification systems, interactive voice assistants, and recommendation engines.

The report also includes a side-by-side comparison of the two.

The most notable difference is that while conventional artificial intelligence relies on limited data sets for a few specific purposes, generative artificial intelligence uses large-scale machine-learning models with more general capabilities.

These large-scale generative AI models, known as foundation models, require far greater quantities of human capital resources and computing power as they are built and expanded over time.

In this context, human capital resources refers to human-generated content, such as books, movies, or even online articles like this one, that is used to train generative AI foundation models.

Some prominent authors have sued OpenAI, alleging that their written works were used without authorization.

Although generative artificial intelligence models can respond to free-text human inputs with human-sounding outputs, those outputs can be inaccurate or even entirely fabricated, as two lawyers found out this year in a federal court in Manhattan after filing a brief that cited court cases ChatGPT had invented.

Part of the problem is inherent to any emerging technology that relies on human inputs; another part is that many publicly available models are more proofs of concept for generative AI than tools tailored to a specific purpose.

The report also addresses how generative artificial intelligence could be abused.

Examples from the report of how bad actors could use the technology include:

- Augmenting criminal activities by generating more convincing scams, malicious code, and deceptions

- Tricking consumers into sharing personal data for advertising or manipulation through enhanced phishing capabilities with voice, image, and video deepfakes

- Enabling scammers to efficiently produce high volumes of convincing text

Additionally, the Governor's Executive Order required a classified joint risk analysis of threats to and vulnerabilities of California's energy infrastructure.

Existing state law already requires state agencies to take additional precautions when using high-risk automated decision systems, which it defines as follows:

“High-risk automated decision system” means an automated decision system that is used to assist or replace human discretionary decisions that have a legal or similarly significant effect, including decisions that materially impact access to, or approval for, housing or accommodations, education, employment, credit, health care, and criminal justice.

Government Code § 11546.45.5

This is only the first report on generative artificial intelligence; the Governor's Executive Order also requires additional reports on the following topics:

- A Risk-Analysis Report directing state agencies and departments to perform a joint risk analysis of potential threats to and vulnerabilities of California’s critical energy infrastructure arising from the use of generative artificial intelligence

- A Procurement Blueprint Report to support an innovative system inside the state government. State agencies will determine and issue general guidelines for public sector use and required training for application of GenAI that build on the White House’s Blueprint for an AI Bill of Rights and the National Institute of Standards and Technology’s AI Risk Management Framework

- A Deployment and Analysis Framework Report to develop guidelines for agencies and departments to analyze the impact that adopting GenAI tools may have on vulnerable communities, and to test projects in environments, or “sandboxes,” approved by the California Department of Technology

- A State Employee Training Report to support California’s government workforce and prepare for the next generation of skills needed to thrive in a GenAI-inclusive economy. Agencies will create training for state workers to use state-approved GenAI to achieve equitable outcomes and will establish criteria to evaluate the impact of GenAI on the state's workforce

- A GenAI Partnership and Symposium Report establishing a formal partnership with the University of California, Berkeley and Stanford University to evaluate the impacts of GenAI on California and what efforts the state should undertake to advance its position in the industry. The state and universities are expected to host a joint summit in 2024 to engage in meaningful discussions about the impacts of GenAI on California and its workforce

- A Legislative Engagement Report to work with the state Legislature to develop focused policy recommendations for responsible use of artificial intelligence

- An Evaluate Impacts of AI on an Ongoing Basis Report, in which each agency, department, or board will evaluate the potential impact of GenAI on regulatory efforts under its authority and recommend necessary updates

“Through responsible planning and implementation, GenAI has the potential to enhance the lives of Californians,” explained Liana Bailey-Crimmins, Director of the California Department of Technology. “The State is excited to be at the forefront of this work. With streamlined services and the ability to predict needs, the deployment of GenAI can make it easier for people to access government services they rely on, saving them time and money.”